Funny thing about the escalators at big Las Vegas casinos: they lead you in but not out. It’s not something you are likely to notice while you are being carried up into the building, but you’ll sure notice it when you try to leave. There are no clocks on the walls to remind you of the passing time, and every convenience is designed to keep you satisfied and betting more. This analogy neatly captures how AI is reshaping our digital ecosystems. Just as the casino ensures you lose more money, AI is poised to reinforce the dominance of established tech giants while breaking down the agreements that once supported content creators, independent journalists, small businesses, and educators.

Google’s recent actions highlight this shift. By allowing AI to generate answers from website content in response to search queries, Google has turned the traffic-based revenue model that many websites relied on into a fading dream. Content creators are seeing their traffic dwindle as AI provides direct answers, bypassing the need for users to visit the original sources. This may not be an entirely bad thing, since Google Search has been getting worse for years. Similarly, Microsoft has aggressively integrated AI into its products, exemplified by the inclusion of a dedicated Copilot key on its new Surface laptops, a feature that could become the digital equivalent of the casino’s one-way escalator, locking users into its ecosystem with unprecedented convenience and control.

Copilot key on new Surface laptop

These developments point to a future where AI integration is not just widespread but deeply embedded within the major platforms. This article explores how the rapid and deep integration of AI, particularly with Microsoft Copilot and Google’s Gemini for Workspace, is reshaping our digital landscape. By bundling these AI tools with their platforms, these tech giants are creating ecosystems that are increasingly difficult for users to escape, raising concerns about competition, innovation, and user autonomy.

The overall trend seems pretty clear: enterprise customers require protections with AI integrations, and they will get them for a price. The rest of us will get AI basically for free, but the companies providing it will seek to leverage every bit of our data to train new models, to build systems customized to each user, and in extreme cases to sell to brokers and advertisers.

Historical Precedents of Over-Bundling

1. Microsoft and Internet Explorer

In the late 1990s, Microsoft bundled Internet Explorer with its Windows operating system, effectively stifling competition from other browsers like Netscape Navigator. This bundling practice led to a landmark antitrust lawsuit where the U.S. Department of Justice argued that Microsoft’s actions were anti-competitive. The court’s decision in favor of the DOJ highlighted how over-bundling can harm consumers by reducing their choices and stifling innovation in the software market.

2. Apple, iTunes, and Google Android Apps

Apple’s bundling of iTunes with its iOS devices created a tightly controlled ecosystem that limited consumers’ ability to use alternative music and media services, leading to higher prices for digital media and stifling competition. Similarly, Google has faced scrutiny for its bundling practices with Android, particularly the requirement for manufacturers to pre-install Google apps to access the Google Play Store. This practice restricted consumer choice and reduced the ability of alternative app developers to compete on a level playing field. In 2018, the European Commission fined Google €4.34 billion for these anti-competitive practices, highlighting the negative impact of over-bundling on the market. The Digital Markets Act in the EU is a sign that these practices are becoming an issue for multiple stakeholders.

Arguments for Forcing Unbundling of AI

1. Promoting Competition and Innovation

Unbundling AI from operating systems and platforms would level the playing field for third-party developers. This would encourage competition, leading to a more vibrant ecosystem of AI solutions. Consumers would benefit from a wider array of innovative products and services tailored to their specific needs, rather than being limited to the default offerings from tech giants.

2. Enhancing Consumer Choice and Control

Allowing users to choose and integrate their preferred AI solutions would empower them with greater control over their digital experiences. This flexibility would enable consumers to select AI tools that best align with their privacy preferences, functional requirements, and ethical standards, rather than being forced to use a pre-installed, potentially less suitable AI.

3. Ensuring Better Data Privacy and Security

When AI solutions are bundled with operating systems and platforms, users often have limited visibility and control over how their personal data is collected, stored, and used. By unbundling AI, consumers could choose AI providers that prioritize data privacy and transparency. This would mitigate the risks associated with centralized data collection and potential misuse, giving users greater confidence in how their personal information is handled.

4. Preventing Market Dominance and Abuse

Forcing the unbundling of AI would help prevent major tech companies from leveraging their dominance in operating systems and platforms to extend their control over the AI market. This separation would reduce the risk of anti-competitive practices, such as preferential treatment for their AI products or exclusion of rivals, ensuring a more open and competitive market landscape.

5. Facilitating Easier Rollback and Regulation

Integrating AI deeply into operating systems and platforms makes it harder to decouple and regulate these technologies. By keeping AI as a separate, optional component, it becomes easier for regulatory bodies to implement and enforce policies that protect consumer interests. Additionally, it allows for more straightforward updates and rollbacks, ensuring that users are not locked into potentially harmful or obsolete AI systems.

Extrapolating Dark Patterns with Bundled AI – Microsoft

Microsoft: From Internet Explorer and Edge to Copilot

Microsoft Edge has certainly improved by leaps and bounds over its predecessor, Internet Explorer. However, the aggressive tactics Microsoft employs to push users into using Edge raise significant concerns. It’s not just about offering a better product; it’s about ensuring that product is unavoidable. As the default browser in Windows, Edge is often the first point of contact when setting up a new PC. Here’s a closer look at how Microsoft has maneuvered to dominate your browsing experience, and how similar tactics could be employed with AI integrations like Copilot in Windows and Office.

Microsoft’s Edge Dark Patterns

1. Hijacking Chrome Tabs

Users have reported starting up their PCs after a Windows update only to find all of their Chrome tabs mysteriously open in Edge. This happens without any explicit consent to import data or change settings. Users find themselves disoriented, suddenly using Edge without realizing it. This stealthy takeover of browser sessions is both intrusive and deceptive, undermining user autonomy.

2. Forcing Links to Open in Edge

Despite setting a preferred browser, Microsoft has forced links from applications like Outlook and Teams to open in Edge. This disregard for user preferences is a clear tactic to drive more traffic through their ecosystem, effectively cornering users into using Edge regardless of their choice.

3. Malware-like Pop-ups

Windows 11 users have reported seeing pop-ups urging them to switch their default search engine to Bing. These prompts appear outside the normal notification system, mimicking the behavior of malware. This kind of aggressive nudging disrupts user workflows and creates a sense of intrusion, further pushing users towards Microsoft’s services.

Projecting to AI: Dark Patterns with Copilot Integration

With the integration of Copilot into Windows and Office, we can anticipate similar manipulative tactics. Here are potential scenarios:

1. Default AI Interactions

Copilot is already the default AI assistant across Windows and Office, and opting out is not easy. Users attempting to use alternative AI solutions might find their requests automatically redirected to Copilot, much like how Edge hijacks browser preferences. This forced interaction reduces user choice and stifles competition.

2. Exploiting Data Collection

Deeply integrating Copilot into core applications allows extensive data collection, leveraging user data to enhance Microsoft’s AI capabilities. Opting out could become a cumbersome process, leading to greater surveillance and reduced privacy. Users would have limited control over how their data is used, prioritizing corporate gains over user rights.

Expanding on Data Exploitation: A Black Mirror Scenario

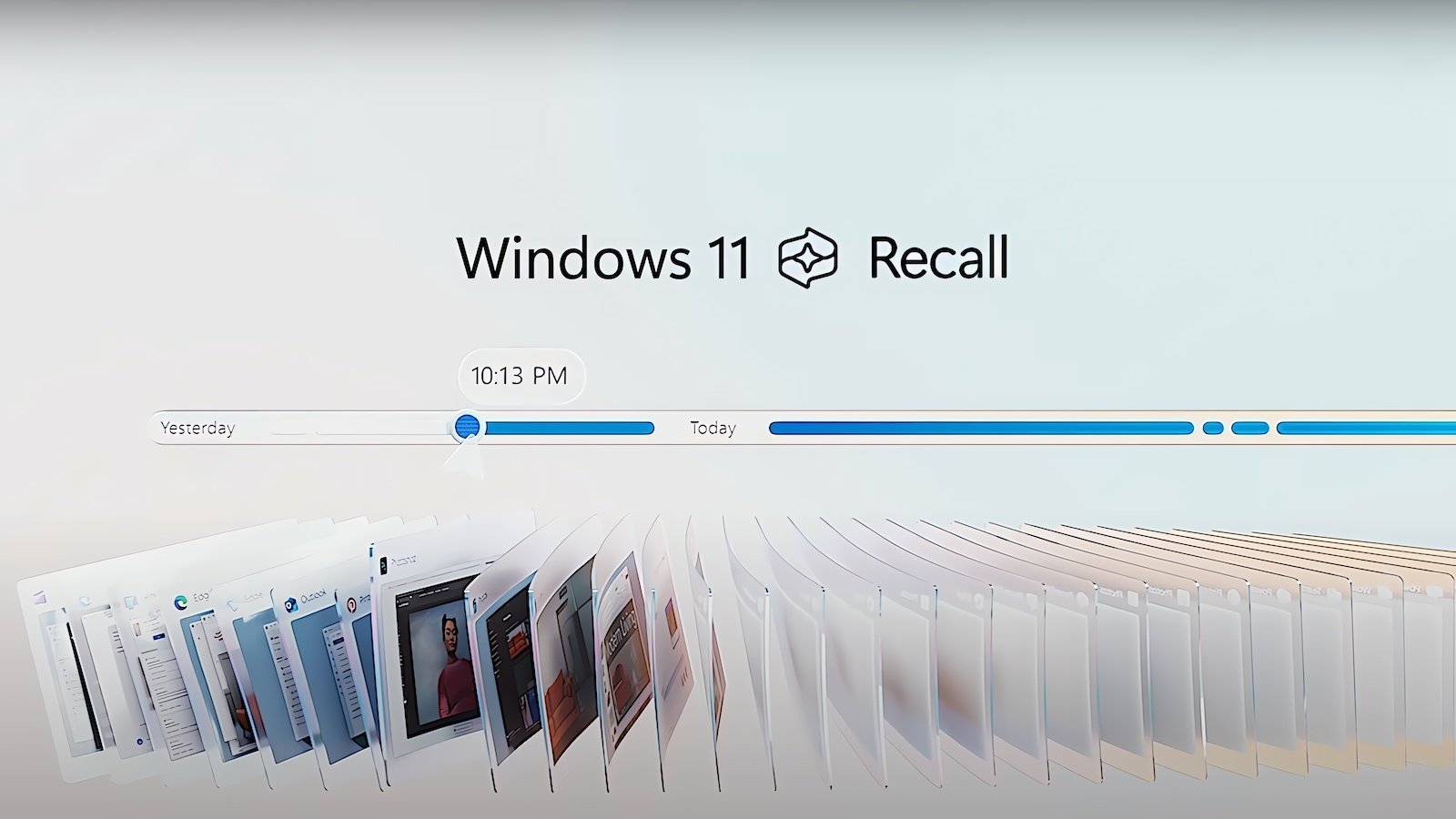

Windows Recall is a new AI-powered search function designed to record user activity on a PC by taking snapshots of the screen at 5-second intervals. This is more than just tracking web activity; it’s local, pervasive surveillance.

Windows Recall: A Photographic Memory for Your PC

On May 21, 2024, Microsoft announced Windows Recall as part of its AI features for high-powered Copilot+ PCs. This feature aims to give users a “photographic memory” by recording everything they do on their PC, generating a searchable timeline of their interactions across applications, websites, documents, and more. Recall captures screen snapshots every 5 seconds, allowing users to search for any past interaction. Need to find a work document or a chat with a friend? Recall makes it possible. Yusuf Mehdi, Microsoft’s EVP and CMO, stated, “We set out to solve one of the most frustrating problems we encounter daily — finding something we know we have seen before on our PC.” The ability to instantly find lost data or recall useful websites can significantly enhance efficiency. Business users inundated with data could benefit from an assistant monitoring their actions and helping them locate information quickly.

While Microsoft assures that snapshot data is stored and processed locally, privacy concerns remain. Users can control what’s recorded and stored, delete snapshots, and pause recording. However, the prospect of hackers accessing Recall data raises significant security issues. Ensuring the safety of this data is crucial for user trust.
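To get a feel for the scale of capture described above, here is a rough back-of-the-envelope calculation. It assumes a snapshot really is taken every 5 seconds of active use; the function name, the 8-hour day, and the 250-day working year are illustrative assumptions, not figures from Microsoft.

```python
def snapshots_per_day(interval_seconds: int = 5, active_hours: float = 8.0) -> int:
    """Upper bound on snapshots captured during one day of active use."""
    return int(active_hours * 3600 / interval_seconds)


if __name__ == "__main__":
    per_day = snapshots_per_day()
    print(per_day)        # 5760 snapshots in an 8-hour day
    print(per_day * 250)  # 1440000 snapshots over 250 working days
```

Even as an upper bound, this suggests why the security of the stored snapshots matters so much: a single compromised machine could expose a very dense record of someone’s working life.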

3. Intrusive AI Prompts

Frequent and disruptive prompts encouraging users to rely on Copilot for various tasks could become the norm. These prompts, similar to the invasive pop-ups for Edge and Bing, could appear during routine activities, pushing users to depend on Copilot for everything from email composition to scheduling. Such interruptions could degrade the user experience and force reliance on Microsoft’s AI.

US House Blocks Microsoft Copilot Over Security Fears

The US House of Representatives has prohibited its staff from using Microsoft’s Copilot AI tools due to major concerns about data privacy and security. Catherine Szpindor, the Chief Administrative Officer of the House, issued the directive to safeguard against potential data leaks to unauthorized cloud services. This decision is in line with previous restrictions on the use of ChatGPT by House staff, emphasizing ongoing apprehensions about data privacy and the risk of exposing sensitive information.

Key Points:

- Data Privacy Concerns: The Office of Cybersecurity identified Microsoft’s Copilot as a risk due to the possibility of leaking sensitive House data to unapproved cloud services.

- Government-Specific AI Needs: The decision highlights the necessity for AI solutions that meet government security requirements, resulting in a temporary ban until a secure, government-specific version of Copilot is available.

- Historical Data Leaks: Past incidents, such as Samsung’s accidental data leaks into ChatGPT and a bug in OpenAI’s system that exposed user chat histories, underscore the potential dangers of using AI tools in sensitive settings.

- Sovereign AI Considerations: The House’s action aligns with the broader concept of sovereign AI, which stresses the importance of developing AI models using secure, domestic data to protect national interests.

- Future Evaluation: The House CAO’s office intends to review Microsoft’s upcoming government edition of Copilot, designed to meet higher security standards and address these concerns.

This cautious stance by the US House of Representatives underscores the critical need for stringent security measures and customized AI solutions when dealing with sensitive data, particularly within government institutions.

Extrapolating Dark Patterns with Bundled AI – Google

Google’s AI Strategy: Leverage and Lock-In

Introduction

A recent leaked memo from a Google researcher, shared on a public Discord server and verified for authenticity, reveals a candid view of Google’s AI strategy and its competitive stance against open-source initiatives and rivals like OpenAI. The document, titled “We Have No Moat, And Neither Does OpenAI,” provides a glimpse into Google’s internal deliberations and the challenges they face. This memo sheds light on the company’s intent to leverage its ecosystem to maintain dominance, hinting at future strategies that could include more aggressive tactics for bundling AI, such as Gemini for Workspace.

Leveraging the Ecosystem

The memo underscores Google’s recognition that their traditional advantages are eroding in the face of rapid open-source innovation. Despite Google’s substantial resources, open-source models are quickly matching and sometimes surpassing their capabilities. To counter this, Google plans to exploit its vast ecosystem. By deeply integrating AI into their existing platforms, Google aims to create a seamless, indispensable experience that locks users into their ecosystem. This strategy isn’t new but signals a more intensive focus on ensuring that their AI services become the default across all applications and devices.

Dark Patterns and AI Integration

Given the context provided by the memo, it’s plausible to extrapolate that Google may employ similar dark patterns to those seen with Microsoft’s promotion of Edge, but this time with AI. For instance, integrating Gemini into Workspace could see Google:

- Default AI Integration: Gemini could become the default assistant in all Google Workspace apps, making it challenging for users to switch to alternative AI tools. This could be similar to how Microsoft forces links to open in Edge, regardless of user preferences.

- Persistent AI Prompts: Users might encounter frequent prompts encouraging them to use Gemini for various tasks, from drafting emails to managing schedules. These intrusive prompts could disrupt workflows and push users towards relying on Gemini, much like the invasive pop-ups for Bing in Edge.

- Local Data Exploitation: With features similar to Windows Recall, Google could introduce AI tools that capture and analyze user actions locally. This might involve taking snapshots of user activity across Workspace apps, ostensibly to improve productivity but also to gather extensive data on user behavior. This level of surveillance, while positioned as a convenience, raises significant privacy concerns.

Memo Highlights and Implications

Here are five key takeaways from the leaked Google memo:

- Open Source Threat: Google acknowledges that open-source models are advancing rapidly, solving problems that Google still struggles with, and doing so at a fraction of the cost.

- Ecosystem Leverage: To counter the open-source threat, Google plans to leverage its existing ecosystem more aggressively, ensuring that their AI tools are deeply integrated and harder to replace.

- Speed and Flexibility: Open-source innovations are outpacing Google’s efforts due to their speed and flexibility. Small, iterative improvements and community contributions are driving significant advancements.

- Privacy Concerns: The potential for AI tools to capture extensive user data locally poses privacy risks, highlighting the need for transparent and secure data practices.

- Collaborative Approach: The memo suggests that Google should consider collaborating more with the open-source community, learning from their innovations to avoid reinventing the wheel.

Google is the original data addict, taking data wherever and whenever possible. AI will not only allow the company to gather more of it; this memo suggests Google is highly incentivized to do so in order to train new models and stay competitive. That means leveraging existing users for data, and thus a fast and hard push for integrated AI systems.

A Healthier AI-Integrated Future: Empowering Users with Choice and Privacy

In an increasingly AI-driven world, it’s crucial to envision a future where the integration of AI benefits individuals and the overall economy. Here’s an alternative approach that ensures a healthier AI landscape:

1. Easy Switching Between AI Partners Across Platforms

Imagine a world where your AI assistant isn’t clingy or possessive, and you can switch it out as easily as changing your browser. Say goodbye to AI monogamy and hello to a dynamic digital life where your tech truly works for you.

- Seamless Transition: People should have the ability to switch between AI partners effortlessly, regardless of the platform they are using. This flexibility would empower users to choose the best AI for their needs without being locked into a single ecosystem.

- Interoperability: Ensuring AI tools are compatible across different platforms encourages competition and innovation, leading to better services and products for users.
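One way to picture the switching and interoperability described above is a shared interface that any assistant could implement. The sketch below is purely illustrative: `Assistant`, `LocalAssistant`, `CloudAssistant`, and `Workspace` are hypothetical names, and no vendor currently exposes this exact contract.

```python
from typing import Protocol


class Assistant(Protocol):
    """Hypothetical common interface any AI provider could implement."""

    def complete(self, prompt: str) -> str: ...


class LocalAssistant:
    """Stand-in for an open-source model running on the user's device."""

    def complete(self, prompt: str) -> str:
        return f"[local] {prompt}"


class CloudAssistant:
    """Stand-in for a vendor-hosted assistant."""

    def complete(self, prompt: str) -> str:
        return f"[cloud] {prompt}"


class Workspace:
    """An app that talks to whichever assistant the user has selected."""

    def __init__(self, assistant: Assistant) -> None:
        self.assistant = assistant

    def switch(self, assistant: Assistant) -> None:
        # Swapping providers replaces one object; the app code is unchanged.
        self.assistant = assistant

    def draft_email(self, topic: str) -> str:
        return self.assistant.complete(f"Draft an email about {topic}")


if __name__ == "__main__":
    app = Workspace(CloudAssistant())
    print(app.draft_email("the budget"))  # [cloud] Draft an email about the budget
    app.switch(LocalAssistant())
    print(app.draft_email("the budget"))  # [local] Draft an email about the budget
```

The point of the design is that the application depends only on the interface, so changing assistants looks more like changing a default browser than migrating to a new ecosystem.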

2. Leveraging Open Source Models for Local Privacy-Sensitive Tasks

Why send your data off to some far-flung data center when you can keep it close to home? By running AI locally with open-source models, you get the best of both worlds: robust security and a smaller carbon footprint. It’s like having your cake and eating it too, without the guilt.

- Enhanced Security: Utilizing open-source AI models to run privacy-sensitive tasks locally can significantly improve security. By processing data on personal devices, users can mitigate the risk of data breaches and unauthorized access.

- Reduced Carbon Footprint: Local processing reduces the need for extensive cloud computing resources, thereby lowering the carbon footprint associated with data centers. This approach supports sustainable AI practices.

- Community Collaboration: Open-source models benefit from community contributions, leading to continuous improvements and transparency in how the AI operates.
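As a minimal sketch of what keeping privacy-sensitive tasks local might look like, the snippet below decides whether a request should go to an on-device model or to a cloud service. The patterns and the function names `is_sensitive` and `choose_backend` are assumptions made up for illustration; a real system would need a far more careful notion of what counts as sensitive.

```python
import re

# Illustrative patterns only; not a complete definition of sensitive data.
SENSITIVE_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # numbers shaped like a US SSN
    re.compile(r"\b(password|diagnosis|salary)\b", re.IGNORECASE),
]


def is_sensitive(text: str) -> bool:
    """Return True if any illustrative pattern appears in the text."""
    return any(pattern.search(text) for pattern in SENSITIVE_PATTERNS)


def choose_backend(text: str) -> str:
    """Return 'local' for sensitive text and 'cloud' otherwise."""
    return "local" if is_sensitive(text) else "cloud"


if __name__ == "__main__":
    print(choose_backend("My password is hunter2"))       # local
    print(choose_backend("Summarize this press release"))  # cloud
```

The default could just as easily be reversed, sending everything to the local model unless the user explicitly opts in to the cloud, which would fit the privacy-first spirit of this section even better.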

3. Ensuring AI Models Prioritize User Interests

Ever feel like your AI is a bit too eager to keep you scrolling or clicking? In a user-centric AI future, your digital assistant works for you, not the platform. No more sneaky nudges—just honest, helpful guidance that respects your time and preferences.

- User-Centric AI: It’s essential that AI models are designed to prioritize the user’s interests over the platform’s preferences. This includes preventing AI from nudging users in ways that serve the platform’s agenda, such as keeping users engaged for longer periods.

- Transparency and Control: Users should have transparency in how AI models make decisions and the ability to control and customize their AI’s behavior to align with their personal preferences and goals.

- Ethical AI Practices: Platforms should adhere to ethical AI practices that respect user autonomy and promote fair, unbiased interactions.

The Benefits

By focusing on these principles, we can create a future where AI serves the best interests of individuals and society, promoting a healthier, more equitable digital ecosystem.

- Empowered Individuals: Users gain more control over their AI tools, enhancing their digital experience and trust in technology.

- Economic Growth: A competitive AI landscape fosters innovation, leading to economic growth and the development of new industries and job opportunities.

- Sustainable Development: Local processing and reduced reliance on massive data centers contribute to environmental sustainability, aligning with global efforts to combat climate change.


What the average consumer can do

The golden rule is simple: don’t put all your eggs in one basket. Ask yourself: if your Apple or Google account were taken from you, could you survive? If the answer is no, it’s time to start trading some of your convenience for peace of mind. Here are some practical steps you can take to diversify your digital life and maintain control:

- Diversify Your Purchases: Avoid relying on a single platform for all your media needs. Instead of buying all your music and movies from iTunes, explore other options like Amazon, Google Play, or Spotify. This ensures that you have access to your content even if one service becomes unavailable.

- Use Different Cloud Services: If you use Microsoft Office, consider using an alternative cloud storage service like Google Drive or Dropbox instead of OneDrive. This reduces your dependency on a single company’s ecosystem for all your storage and productivity needs.

- Mix and Match Software: Don’t limit yourself to the default software provided by your operating system. If you’re using Windows, try alternative web browsers like Brave instead of Edge. On macOS, explore different productivity tools beyond those offered by Apple.

- Explore Open-Source Options: Use open-source software whenever possible. These options often provide greater transparency and control over your data. Open-source platforms like LibreOffice for productivity, VLC for media playback, and Nextcloud for cloud storage can be excellent alternatives to proprietary solutions.

- Be Cautious with AI Integration: Evaluate the AI tools you use and consider alternatives that prioritize user privacy and data security. For instance, if you’re concerned about privacy, look for AI assistants that run locally on your device rather than those that rely heavily on cloud processing.

- Support Smaller and Independent Developers: Choose products and services from smaller or independent developers when possible. This not only supports innovation but also helps maintain a diverse and competitive market.

- Stay Informed and Advocate for Change: Keep yourself updated on the latest developments in technology and digital rights. Support organizations and initiatives that advocate for consumer rights, data privacy, and open standards.

By diversifying your digital habits and making informed choices, you can help foster a more open and competitive digital landscape. This not only benefits you as a consumer but also promotes innovation and fairness in the technology sector.

About the Author

Eric Hawkinson

Learning Futurist

erichawkinson.com

Eric is a learning futurist, tinkering with and designing technologies that may better inform the future of teaching and learning. Eric’s projects have included augmented tourism rallies, AR community art exhibitions, mixed reality escape rooms, and other experiments in immersive technology.

Roles

Professor – Kyoto University of Foreign Studies

Research Coordinator – MAVR Research Group

Founder – Together Learning

Developer – Reality Labo

Community Leader – Team Teachers

Chair – World Immersive Learning Labs